To convert a decimal value to ASCII text, follow these basic steps:

- Identify the decimal value that needs to be converted.

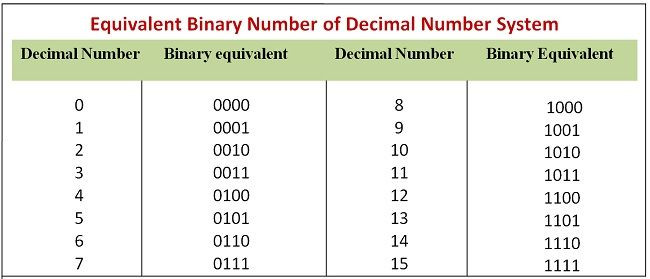

- Convert the decimal value to its binary representation.

- Divide the binary value into groups of 8 bits (1 byte each), padding the left with zeros so that the total length is a multiple of 8.

- Convert each byte to its decimal representation.

- Look up the corresponding ASCII character for each decimal value.

- Combine the ASCII characters to create the text representation of the original decimal value.
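
The steps above can be sketched in Python as follows. This is a minimal illustration, not the site's own implementation. `decimal_to_ascii` is a hypothetical helper name. It assumes that a decimal value holding several characters stores its bytes most significant first (big-endian), and it rejects any byte above 127 because ASCII defines only 0 to 127.

```python
def decimal_to_ascii(value: int) -> str:
    """Hypothetical helper: turn a non-negative decimal integer into ASCII text.

    Assumes the bytes of the number are read most significant first
    (big-endian), so 18537 -> bytes 72, 105 -> "Hi".
    """
    # Step 1: `value` is the decimal value to convert.
    if value < 0:
        raise ValueError("value must be non-negative")

    # Step 2: binary representation, without Python's "0b" prefix.
    bits = format(value, "b")

    # Pad on the left with zeros so the length is a whole number of bytes.
    bits = bits.zfill((len(bits) + 7) // 8 * 8)

    # Step 3: split into 8-bit groups (one byte each).
    groups = [bits[i:i + 8] for i in range(0, len(bits), 8)]

    # Step 4: convert each 8-bit group back to a decimal value.
    codes = [int(group, 2) for group in groups]

    # Step 5: look up each code; ASCII only defines values 0-127.
    chars = []
    for code in codes:
        if code > 127:
            raise ValueError(f"{code} is outside the ASCII range 0-127")
        chars.append(chr(code))

    # Step 6: combine the characters into a single string.
    return "".join(chars)


print(decimal_to_ascii(72))     # H
print(decimal_to_ascii(18537))  # Hi
```

For a single value between 0 and 127, the binary and byte-splitting steps produce just one byte, so the result is a single character.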

Explanation:

- The decimal value is the number to convert. It may be a single character code between 0 and 127, or a larger number whose bytes each hold one character code.

- Converting the decimal value to binary is necessary because computers store ASCII codes as binary numbers, and the binary form shows where each byte begins and ends.

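- Dividing the binary value into groups of 8 bits is necessary because each ASCII character is stored in one byte. Strictly speaking, ASCII is a 7-bit code covering values 0 to 127, so in valid ASCII text the highest bit of every byte is 0.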

- Converting each byte back to decimal is necessary because ASCII tables list each character by its decimal code (usually alongside its hexadecimal and octal codes).

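- Looking up the corresponding ASCII character for each decimal value is necessary because the ASCII table maps each value from 0 to 127 to exactly one character; for example, 72 is "H". Values 0 to 31 and 127 are control characters rather than printable symbols.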

- Combining the characters in their original order is the final step: it produces the text that the original decimal value encodes.
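
To make the reasoning about bytes concrete, here is the same split done arithmetically rather than with binary strings. Taking the lowest 8 bits with `& 0xFF` and then shifting right by 8 is equivalent to cutting the binary form into 8-bit groups. This is a sketch under the same big-endian assumption as before, and `split_into_bytes` is a hypothetical helper name.

```python
def split_into_bytes(value: int) -> list[int]:
    """Hypothetical helper: return the 8-bit groups of a non-negative integer
    as decimal values, most significant byte first (big-endian assumption)."""
    if value < 0:
        raise ValueError("value must be non-negative")
    codes = []
    while True:
        codes.append(value & 0xFF)  # the lowest 8 bits form one byte
        value >>= 8                 # discard the byte just read
        if value == 0:
            break
    codes.reverse()  # bytes were collected least significant first
    return codes


# A single code fits in one byte: 72 is 01001000 in binary.
print(format(72, "08b"))     # 01001000
print(split_into_bytes(72))  # [72]

# A larger value holds several codes: 18537 = 72 * 256 + 105.
codes = split_into_bytes(18537)
print(codes)                                 # [72, 105]
print("".join(chr(code) for code in codes))  # Hi
```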

In programming, decimal-to-ASCII conversion can be done with built-in functions or with custom code. For example, Python's built-in `chr()` function converts a decimal value to its corresponding character. `chr()` accepts any Unicode code point, so it yields an ASCII character only for values 0 to 127.
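
A short sketch of the built-in route in Python follows. Besides `chr()` and its inverse `ord()`, it uses `bytes(...).decode("ascii")`, which raises `UnicodeDecodeError` for any byte above 127. `chr(200)`, by contrast, quietly returns a non-ASCII character. The multi-character example again assumes big-endian byte order, which is an illustrative choice and not something the conversion itself fixes.

```python
# chr() maps a code point to a one-character string; for 0-127 this is ASCII.
print(chr(72))   # H
print(ord("H"))  # 72  (ord() is the inverse of chr())

# Several codes at once: build a bytes object and decode it strictly as ASCII.
codes = [72, 101, 108, 108, 111]
text = bytes(codes).decode("ascii")
print(text)      # Hello

# One large decimal value: unpack it into big-endian bytes first (assumption).
value = 18537
raw = value.to_bytes((value.bit_length() + 7) // 8, "big")
print(raw.decode("ascii"))  # Hi
```

Decoding with `"ascii"` is a stricter check than calling `chr()` on each value, which is useful when the input should contain only ASCII text.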

Overall, understanding decimal-to-ASCII conversion is useful for anyone working in computer science, data analysis, or communication protocols. Knowing how to convert between these two representations lets you read numeric data, such as the byte values in a protocol message, as text.

James is a software engineer and the founder of the website “Decimal to ASCII”. He has a passion for technology and programming and has been in the industry for over a decade. He built “Decimal to ASCII” with a strong focus on user experience and efficiency, as a reliable and user-friendly tool for converting decimal values to ASCII characters.